Following on from last week’s discussion on InfiniBand and Ethernet, I am diving a little deeper into RDMA today.

The idea behind CPU offloading is simple and very effective in high-performance environments. Direct Memory Access (DMA) allows hardware such as a NIC or GPU to move data to and from memory directly, without the CPU and the OS kernel handling each transfer. This improves the performance of the application itself and frees up the CPU for other processes.

Taking this a step further, applications communicating over a network may require access to memory on a remote node. This is achieved using specialised Network Interface Cards (NICs) supporting Remote Direct Memory Access (RDMA), which, as the name suggests, allows applications to access memory on remote nodes.

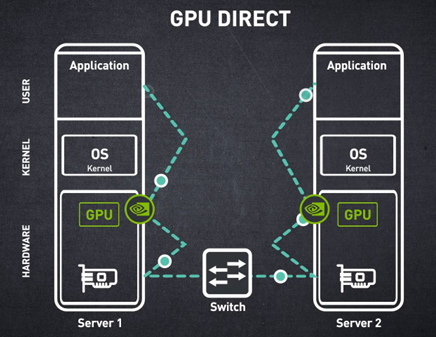
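To make the idea concrete, here is a toy model of a one-sided RDMA write in plain Python. It is only a sketch of the concept, not how a real NIC or the verbs API works: `ToyNic`, `MemoryRegion`, `rdma_write` and the `rkey` value are hypothetical names made up for illustration. The point it tries to show is that the remote application registers a buffer once, and after that the NIC places data into it without the remote application running any code.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryRegion:
    """A buffer the owning application has registered for remote access."""
    buffer: bytearray
    rkey: int  # key a remote peer must present to touch this region


@dataclass
class ToyNic:
    """Hypothetical stand-in for an RDMA-capable NIC on one node."""
    regions: dict = field(default_factory=dict)
    cpu_interrupts: int = 0  # how often the host CPU had to get involved

    def register(self, size: int, rkey: int) -> MemoryRegion:
        # Registration is the one step where host software is involved.
        region = MemoryRegion(bytearray(size), rkey)
        self.regions[rkey] = region
        return region

    def handle_remote_write(self, rkey: int, offset: int, data: bytes) -> None:
        # The NIC checks the key and the bounds, then writes straight into
        # the registered buffer. cpu_interrupts is deliberately left untouched.
        region = self.regions.get(rkey)
        if region is None:
            raise PermissionError("unknown rkey")
        if offset < 0 or offset + len(data) > len(region.buffer):
            raise ValueError("write outside registered region")
        region.buffer[offset:offset + len(data)] = data


def rdma_write(remote_nic: ToyNic, rkey: int, offset: int, data: bytes) -> None:
    """One-sided write: the initiator names the remote buffer by its key."""
    remote_nic.handle_remote_write(rkey, offset, data)


# Node B registers 16 bytes and shares the key with node A out of band.
node_b_nic = ToyNic()
region_b = node_b_nic.register(size=16, rkey=0x1234)

# Node A writes into node B's memory; node B's application runs no code here.
rdma_write(node_b_nic, rkey=0x1234, offset=4, data=b"hello")
print(bytes(region_b.buffer[4:9]), node_b_nic.cpu_interrupts)
```

In a real deployment the registration, key exchange and write would go through the RDMA verbs interface and the NIC hardware; the toy version only keeps the shape of the interaction.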

Critically, this process works very well for GPU architectures. GPUs have their own high-bandwidth memory, so local applications using the GPU leverage DMA within a node. However, AI training clusters, HPC and Message Passing Interface (MPI) applications span many nodes and, as a result, rely heavily on RDMA for optimal performance.

RDMA is provided natively as part of the InfiniBand protocol, which is one of the reasons why IB has been prevalent in AI and HPC environments. However, most Ethernet vendors now support RDMA over Converged Ethernet (RoCE). RoCE comes in two flavours: RoCEv1 operates at Layer 2, restricting RDMA to a single Ethernet broadcast domain, while RoCEv2 runs over UDP/IP at Layer 3, making it routable and allowing for greater scale.
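The difference between the two flavours is easiest to see in how each one wraps the InfiniBand transport headers. The sketch below stacks the headers for each version and adds up the per-packet overhead. It assumes the simplest case (IPv4 without options, no VLAN tag, no optional InfiniBand extended headers), and the `STACKS` table and helper names are my own, purely for illustration.

```python
# Fixed header sizes in bytes for the simplest case: no VLAN tag,
# no IP options, no optional InfiniBand extended headers.
ETH_HEADER = 14   # destination MAC, source MAC, EtherType
ETH_FCS = 4       # Ethernet frame check sequence
IB_GRH = 40       # InfiniBand Global Route Header, carried by RoCEv1
IPV4_HEADER = 20  # replaces the GRH in RoCEv2
UDP_HEADER = 8    # RoCEv2 rides on UDP
IB_BTH = 12       # InfiniBand Base Transport Header
IB_ICRC = 4       # InfiniBand invariant CRC

ROCE_V1_ETHERTYPE = 0x8915  # identifies RoCEv1 directly in the Ethernet header
ROCE_V2_UDP_PORT = 4791     # UDP destination port that identifies RoCEv2

STACKS = {
    "RoCEv1": {
        "layers": [("Ethernet", ETH_HEADER), ("IB GRH", IB_GRH),
                   ("IB BTH", IB_BTH), ("ICRC", IB_ICRC), ("FCS", ETH_FCS)],
        "routable": False,  # no IP header, so IP routers cannot forward it
    },
    "RoCEv2": {
        "layers": [("Ethernet", ETH_HEADER), ("IPv4", IPV4_HEADER),
                   ("UDP", UDP_HEADER), ("IB BTH", IB_BTH),
                   ("ICRC", IB_ICRC), ("FCS", ETH_FCS)],
        "routable": True,   # ordinary UDP/IP as far as the network is concerned
    },
}


def overhead_bytes(version: str) -> int:
    """Total header and trailer bytes wrapped around each RDMA payload."""
    return sum(size for _, size in STACKS[version]["layers"])


def wire_efficiency(version: str, payload: int) -> float:
    """Fraction of the bytes on the wire that are actual payload."""
    return payload / (payload + overhead_bytes(version))


for version, stack in STACKS.items():
    names = " | ".join(name for name, _ in stack["layers"])
    print(f"{version}: {names}")
    print(f"  overhead={overhead_bytes(version)} B, "
          f"routable={stack['routable']}, "
          f"efficiency at 4096 B payload={wire_efficiency(version, 4096):.3f}")
```

The structural point is that RoCEv2 swaps the InfiniBand routing header for ordinary IP and UDP headers, which is what lets standard Layer 3 switches and routers forward it.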

And finally, the Ultra Ethernet Consortium (UEC) is avoiding the word “over” altogether and taking a clean-slate approach to RDMA. The new Ultra Ethernet Transport (UET) uses ephemeral connections, which do not require a handshake and only maintain state for the duration of the transaction. This reduces latency and improves performance.

I hope this brief explanation was helpful. As usual, set me straight if I’ve gotten anything wrong, and let me know if you have questions.

Thank you to NVIDIA for the diagram, from their very useful training materials.
